E3Gen: Efficient, Expressive and Editable Avatars Generation

Abstract

This paper introduces 3D Gaussian for efficient, expressive, and editable digital avatar generation. This task faces two major challenges: (1) the unstructured nature of 3D Gaussian makes it incompatible with current generation pipelines; (2) the expressive animation of 3D Gaussian in a generative setting that involves training with multiple subjects remains unexplored. We propose a novel avatar generation method, named E3Gen, to address these challenges. First, we propose a novel generative UV features plane representation that encodes unstructured 3D Gaussian onto a structured 2D UV space defined by the SMPL-X parametric model. This representation not only preserves the representation ability of the original 3D Gaussian but also introduces a shared structure among subjects to enable generative learning of the diffusion model. To tackle the second challenge, we propose a part-aware deformation module to achieve robust and accurate full-body expressive pose control. Extensive experiments demonstrate that our method achieves superior performance in avatar generation and enables expressive full-body pose control and editing.

Full Video

Method Overview

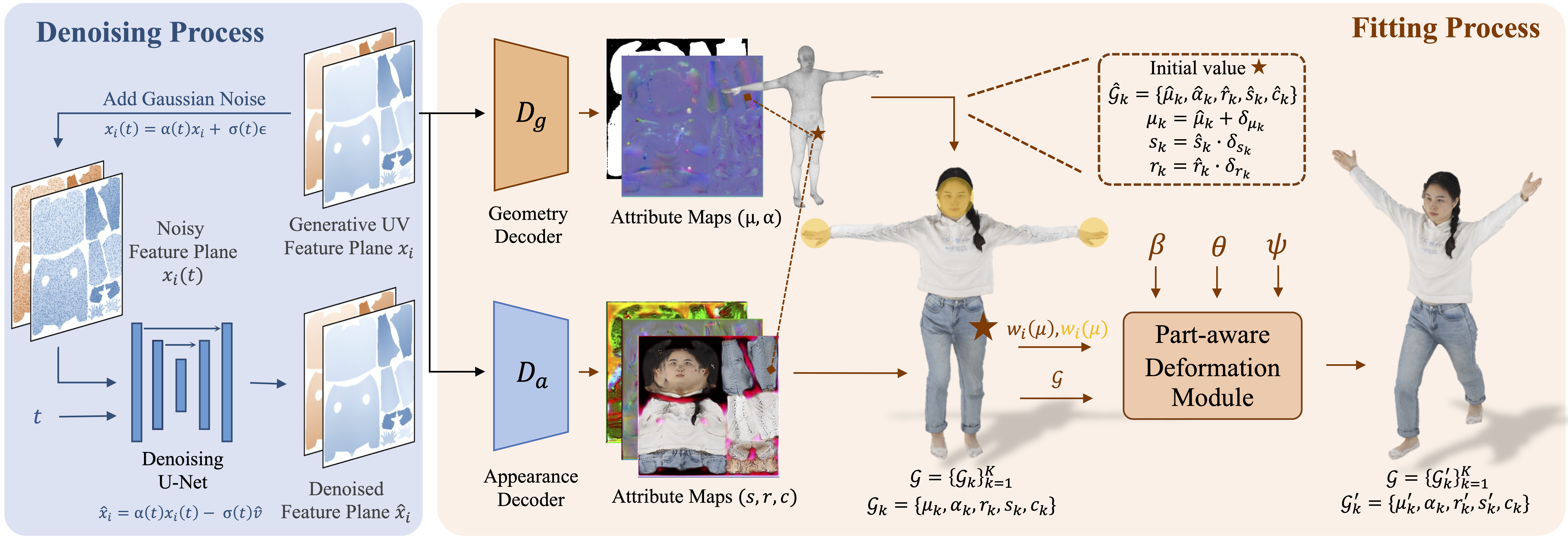

Our approach uses a single-stage diffusion model that trains the denoising and fitting processes simultaneously. The UV features plane, x_i, is randomly initialized and optimized by both processes. In the denoising process, noise is added to the UV features plane, which is then denoised by a denoising UNet following a v-parameterization scheme. In the fitting process, the UV features plane is decoded into Gaussian attribute maps, which are used to generate a 3D-Gaussian-based avatar in canonical space by fetching the corresponding attributes for each initialized Gaussian primitive. Finally, a part-aware deformation module deforms the avatar into the target pose based on SMPL-X parameters.
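As a rough illustration of two steps in this overview, the sketch below shows (a) how a v-parameterization training target is commonly formed for a noised feature plane, and (b) how per-Gaussian attributes might be fetched from a decoded attribute map by UV lookup. This is not the E3Gen implementation. The cosine noise schedule, the bilinear lookup, the array shapes, and all function names (`alpha_sigma`, `add_noise`, `v_target`, `x0_from_v`, `fetch_attributes`) are assumptions made for illustration, and the decoder and denoising UNet are omitted.

```python
import numpy as np

def alpha_sigma(t):
    """Assumed cosine schedule with alpha^2 + sigma^2 = 1, for t in [0, 1]."""
    return np.cos(0.5 * np.pi * t), np.sin(0.5 * np.pi * t)

def add_noise(x0, eps, t):
    """Forward diffusion: x_t = alpha * x_0 + sigma * eps."""
    alpha, sigma = alpha_sigma(t)
    return alpha * x0 + sigma * eps

def v_target(x0, eps, t):
    """v-parameterization target: v = alpha * eps - sigma * x_0."""
    alpha, sigma = alpha_sigma(t)
    return alpha * eps - sigma * x0

def x0_from_v(xt, v, t):
    """Recover the clean plane from a v prediction: x_0 = alpha * x_t - sigma * v."""
    alpha, sigma = alpha_sigma(t)
    return alpha * xt - sigma * v

def fetch_attributes(attr_map, uv):
    """Bilinearly sample an (H, W, C) attribute map at (N, 2) UV coords in [0, 1].

    uv[:, 0] indexes the width axis and uv[:, 1] the height axis (an assumed
    convention). Returns an (N, C) array, one attribute vector per primitive.
    """
    h, w, _ = attr_map.shape
    x = uv[:, 0] * (w - 1)
    y = uv[:, 1] * (h - 1)
    # Clamp the top-left texel so that the +1 neighbour stays in bounds.
    x0 = np.clip(np.floor(x).astype(int), 0, w - 2)
    y0 = np.clip(np.floor(y).astype(int), 0, h - 2)
    fx = (x - x0)[:, None]
    fy = (y - y0)[:, None]
    top = (1 - fx) * attr_map[y0, x0] + fx * attr_map[y0, x0 + 1]
    bottom = (1 - fx) * attr_map[y0 + 1, x0] + fx * attr_map[y0 + 1, x0 + 1]
    return (1 - fy) * top + fy * bottom

# Usage with arbitrary, hypothetical sizes.
rng = np.random.default_rng(0)
plane = rng.standard_normal((16, 16, 8))      # stand-in for a UV features plane
noise = rng.standard_normal(plane.shape)
t = 0.3
noisy_plane = add_noise(plane, noise, t)
target = v_target(plane, noise, t)            # what a denoiser would regress
uv_coords = rng.uniform(0.0, 1.0, size=(100, 2))
gaussian_attrs = fetch_attributes(plane, uv_coords)  # shape (100, 8)
```

In this sketch, a perfect v prediction lets `x0_from_v` recover the clean plane exactly, which is one reason the v target is a convenient regression objective at both low and high noise levels.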

Random Generation

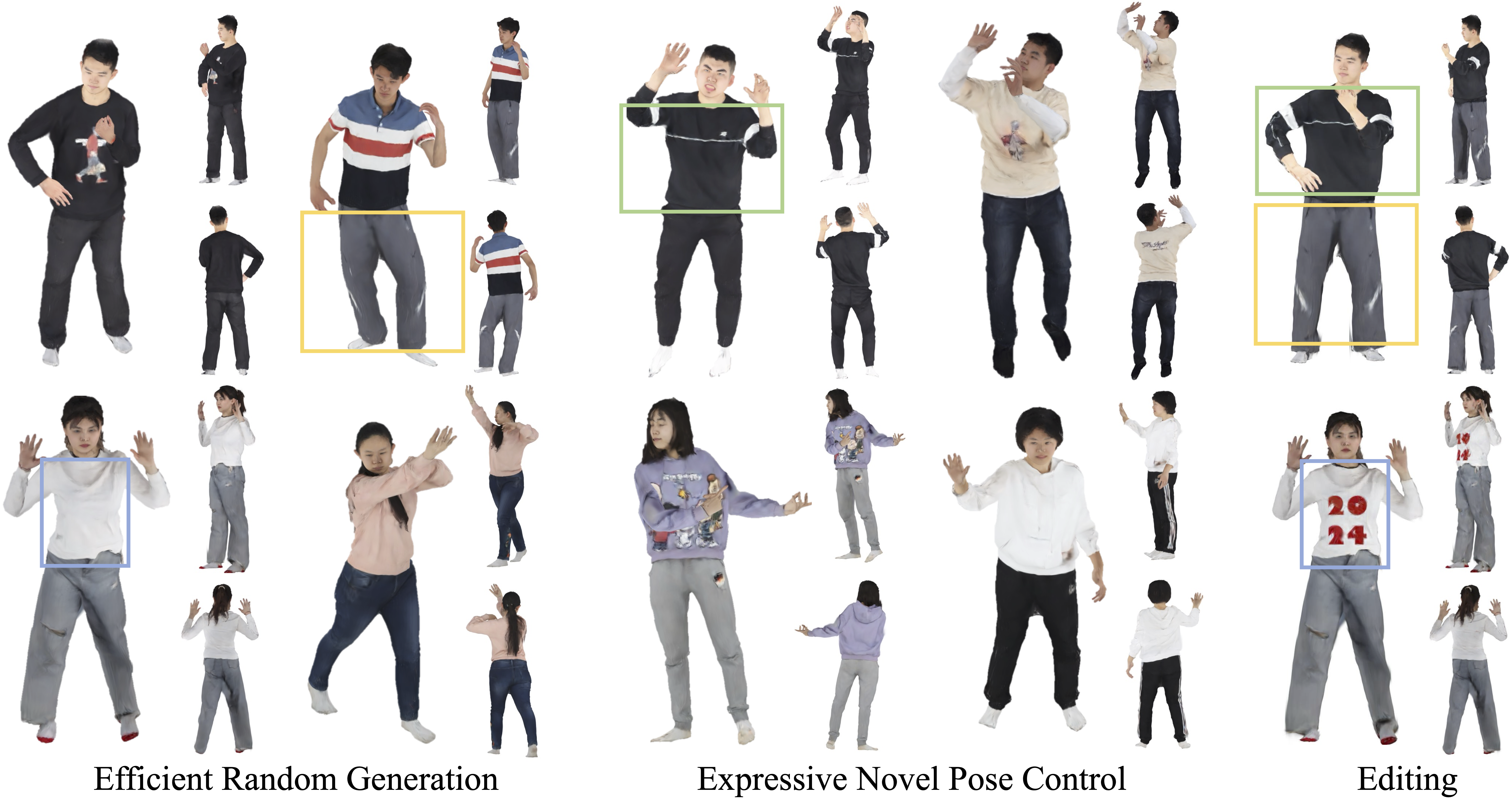

Our method can generate digital avatars with complex appearance and rich details, clearly exhibiting fingers and clothing wrinkles.

Novel Pose Animation

Our method produces robust animation results on these challenging novel poses.

Editing

Our method enables local editing and partial attribute transfer. The edited results also support pose control.

Citation

@inproceedings{zhang2024e3gen,
  author = {Zhang, Weitian and Yan, Yichao and Liu, Yunhui and Sheng, Xingdong and Yang, Xiaokang},
  title = {E3Gen: Efficient, Expressive and Editable Avatars Generation},
  year = {2024},
  booktitle = {Proceedings of the 32nd ACM International Conference on Multimedia},
  pages = {6860--6869},
  numpages = {10},
  series = {MM '24}
}